Draw Me an Electric Sheep

If you want to teach the machine to draw a sheep using AI/ML, you will simply show it thousands and thousands of drawings of sheep, and eventually the machine will learn and work things out.

“If you please — draw me a sheep!”

— From “The Little Prince” by Antoine de Saint-Exupéry

Do you remember the Little Prince asking the pilot to draw him a sheep? After many attempts, however, the child was still not satisfied with the drawings. Fed up, the pilot drew a box and told the prince to imagine the sheep inside it. With his creativity, the Little Prince could see it lying down, asleep. It seems that drawing a sheep is not as easy as one might think :)

What if we want to teach a machine to draw an electric sheep?

The alleged sheep contained an oat-tropic circuit; at the sight of such cereals it would scramble up convincingly and amble over.

— From “Do Androids Dream of Electric Sheep?” by Philip K. Dick

How to teach a machine to draw (electric) sheep?

One option is to program the machine in your favorite programming language, that is, to define explicit, step-by-step instructions for the computer to follow.

For instance, the program would have methods to sketch the sheep's main characteristics: head, face, ears, coat of fleece on its body, four legs, a tiny tail, its color, etc. Although possible, this program would probably be cumbersome to write, and the results would most likely not be approved by critics like the Little Prince.
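To make the explicit-programming option concrete, here is a minimal sketch of what such a program might look like. It is only an illustration, not code from this project: the helper names (`circle`, `line`, `draw_sheep`) and every coordinate are hand-picked assumptions, and the output is a plain SVG string.

```python
def circle(cx, cy, r, fill="white"):
    """Return an SVG circle element as a string."""
    return f'<circle cx="{cx}" cy="{cy}" r="{r}" fill="{fill}" stroke="black"/>'


def line(x1, y1, x2, y2):
    """Return an SVG line element as a string."""
    return f'<line x1="{x1}" y1="{y1}" x2="{x2}" y2="{y2}" stroke="black"/>'


def draw_sheep(fleece="white", face="black"):
    """Assemble a sheep from hard-coded parts (all coordinates are guesses)."""
    parts = []
    # Four legs, drawn first so the body overlaps their tops.
    for x in (70, 90, 130, 150):
        parts.append(line(x, 110, x, 150))
    # Coat of fleece, approximated by four overlapping circles.
    for cx in (70, 95, 120, 145):
        parts.append(circle(cx, 90, 28, fleece))
    parts.append(circle(38, 85, 6, fleece))     # tiny tail
    parts.append(circle(175, 70, 16, face))     # head
    parts.append(circle(163, 58, 5, face))      # left ear
    parts.append(circle(187, 58, 5, face))      # right ear
    parts.append(circle(180, 68, 2, "white"))   # eye
    body = "\n  ".join(parts)
    return (
        '<svg xmlns="http://www.w3.org/2000/svg" width="220" height="170">\n'
        f"  {body}\n</svg>"
    )


print(draw_sheep())
```

Every sheep this program draws is the same sheep. Changing its pose, proportions, or style means editing the instructions by hand, which is exactly the limitation discussed next.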

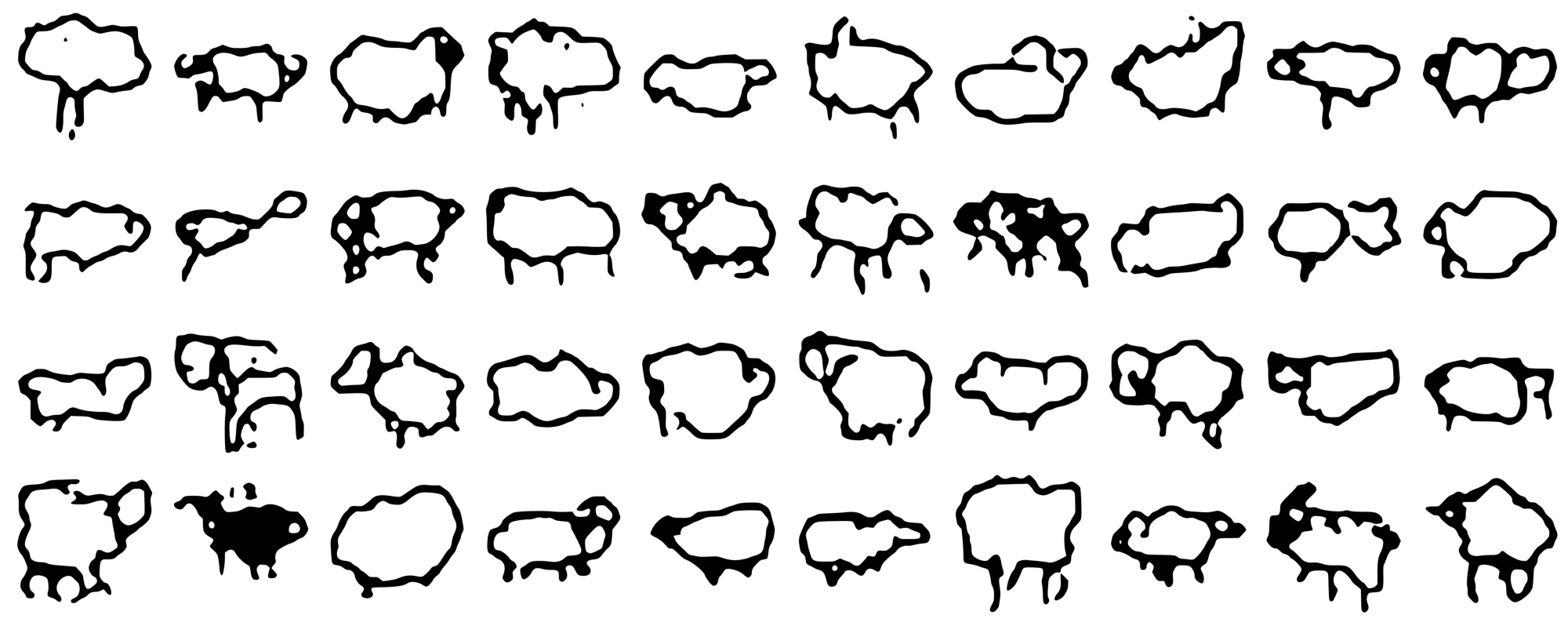

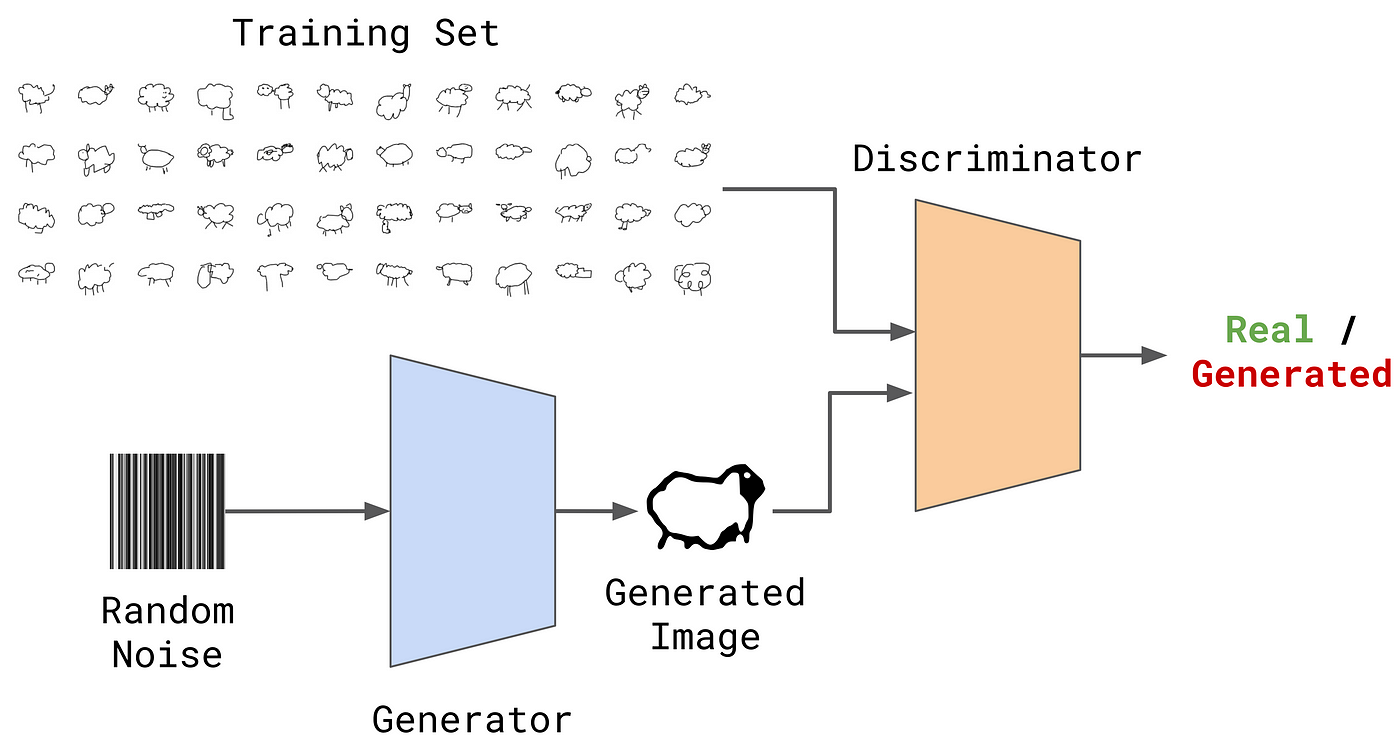

But most importantly, we humans are imperfectly ingenious at sketching sheep. Remember The Sheep Market project? Or have a look at some of the tens of thousands of sheep doodles made by real people in The Quick, Draw! experiment (see Figure 1).

All the variation and diversity that humans express when sketching a sheep would be extremely difficult to capture in a program written in Python, Java, or C++. We probably would be better off writing a program that draws a box and claims that the sheep is in it :)

AI/ML to the rescue

Artificial Intelligence based on Machine Learning (AI/ML) offers an interesting alternative: instead of encoding the machine with instructions, let’s train it.

If you want to teach the machine to draw a sheep using AI/ML, you won’t tell it to draw the head, then the body with fleece, the face, and eyes. You will simply show it thousands and thousands of drawings of sheep, and eventually the machine will learn and work things out. If it keeps failing, you don’t rewrite the code. You just keep training it.

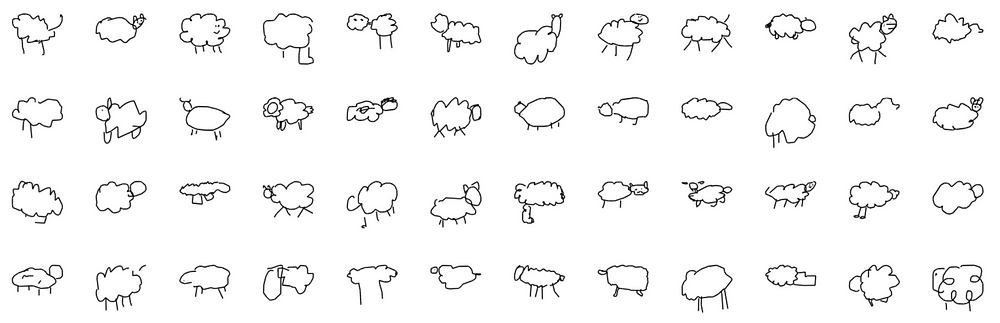

For this exercise, we will focus on an AI/ML technique named Generative Adversarial Networks (GANs). A GAN is implemented as a system of two deep neural networks competing with each other in a zero-sum game framework. The two players in the game are called the Generator and the Discriminator.

The Generator, as its name implies, generates candidates, and the Discriminator evaluates them. The Generator's training goal is to produce novel synthesized instances that appear to have come from the set of real instances, whereas the Discriminator's goal is to differentiate between real and generated instances.
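The two opposing goals can be written down as loss functions. The sketch below is an illustration under stated assumptions rather than our training code: it uses binary cross-entropy on the Discriminator's output (the probability that a sample is real), and for the Generator it uses the commonly applied "non-saturating" loss instead of the strict zero-sum form. The function names and the example scores are made up for the demonstration.

```python
import numpy as np


def binary_cross_entropy(labels, probs, eps=1e-7):
    """Mean binary cross-entropy between 0/1 labels and predicted probabilities."""
    probs = np.clip(probs, eps, 1.0 - eps)  # avoid log(0)
    return float(-np.mean(labels * np.log(probs) + (1 - labels) * np.log(1 - probs)))


def discriminator_loss(d_real, d_fake):
    """The Discriminator wants D(real) close to 1 and D(fake) close to 0."""
    real_term = binary_cross_entropy(np.ones_like(d_real), d_real)
    fake_term = binary_cross_entropy(np.zeros_like(d_fake), d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """The Generator wants its samples scored as real: D(fake) close to 1."""
    return binary_cross_entropy(np.ones_like(d_fake), d_fake)


# Hypothetical Discriminator scores (probability of "real") in two scenarios.
d_real = np.array([0.9, 0.8, 0.95])
caught = np.array([0.1, 0.2, 0.05])  # generated samples that are spotted
fooled = np.array([0.7, 0.8, 0.9])   # generated samples that pass as real

print("fakes caught: D loss", discriminator_loss(d_real, caught),
      "G loss", generator_loss(caught))
print("fakes fool D: D loss", discriminator_loss(d_real, fooled),
      "G loss", generator_loss(fooled))
```

When the generated samples are caught, the Discriminator's loss is low and the Generator's is high; when they fool the Discriminator, the situation reverses. Training alternates between updating each network to lower its own loss.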

You can think of it in terms of an apprentice-master relationship in art: starting from an empty canvas, the apprentice (Generator) produces paintings that the master (Discriminator) judges, based on her experience and previous knowledge, as real pieces of art or not.

The apprentice will get better and better based on the master’s feedback, until reaching a level where the master will not be able to discriminate between her apprentice’s work and a good piece of art.

GANs have shown very impressive results, such as generating photo-realistic images like the ones shown in Figure 2.

Drawing Electric Sheep with GANs

“That doesn’t matter. Draw me a sheep…”

— From “The Little Prince” by Antoine de Saint-Exupéry

Very well, coming back to our task at hand, our goal is to train a GAN to produce human-level sheep drawings. The following figure illustrates our approach:

In a nutshell:

- The Dataset. As the training set, we use the sheep dataset from The Quick, Draw! experiment.

- Architecture. We use a Deep Convolutional Generative Adversarial Network (DCGAN) to implement our approach.

- Deep Learning Tools. We use Keras with a TensorFlow backend for the model implementation and JupyterLab for prototyping.

- Models. After the Generator and Discriminator models are trained, we save them independently.

- From random noise to sheep. We then use the Generator to produce sheep drawings from random noise. The results are as good (or as bad and sketchy) as the ones produced by humans ;)
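The first and last steps above can be sketched in a few lines of NumPy. This is a hedged illustration, not our actual notebook: it assumes the Quick, Draw! NumPy bitmap format (each drawing is a flattened 28x28 grayscale image), a Generator whose outputs lie in [-1, 1] (a common DCGAN choice), and a noise vector of size 100. The names `prepare_bitmaps`, `sample_noise`, `generate_drawings`, and `dummy_generator`, as well as the file name in the comment, are hypothetical.

```python
import numpy as np

LATENT_DIM = 100  # assumed size of the noise vector; not stated in this post


def prepare_bitmaps(flat_bitmaps):
    """Reshape flattened 28x28 drawings to (N, 28, 28, 1) and scale to [-1, 1]."""
    images = np.asarray(flat_bitmaps, dtype=np.float32).reshape(-1, 28, 28, 1)
    return images / 127.5 - 1.0


def sample_noise(n, latent_dim=LATENT_DIM, seed=None):
    """Draw n latent vectors from a standard normal distribution."""
    rng = np.random.default_rng(seed)
    return rng.standard_normal((n, latent_dim)).astype(np.float32)


def generate_drawings(generator, n, seed=None):
    """Map noise to images with any callable generator and rescale to 0..255."""
    outputs = np.asarray(generator(sample_noise(n, seed=seed)))
    pixels = (outputs + 1.0) * 127.5  # undo the [-1, 1] scaling
    return np.clip(pixels, 0, 255).astype(np.uint8)


def dummy_generator(z):
    """Stand-in for a trained Generator: returns random images in [-1, 1]."""
    rng = np.random.default_rng(0)
    return np.tanh(rng.standard_normal((len(z), 28, 28, 1)))


# In practice: flat = np.load("sheep.npy")  # hypothetical local file name
flat = np.random.default_rng(1).integers(0, 256, size=(8, 784))
train_images = prepare_bitmaps(flat)
drawings = generate_drawings(dummy_generator, n=4, seed=42)
print(train_images.shape, drawings.shape)
```

With a trained Keras model, the `predict` method of the saved Generator could be passed in place of `dummy_generator`, provided its input size matches `LATENT_DIM`.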

“Do androids dream?”

― Philip K. Dick, Do Androids Dream of Electric Sheep?

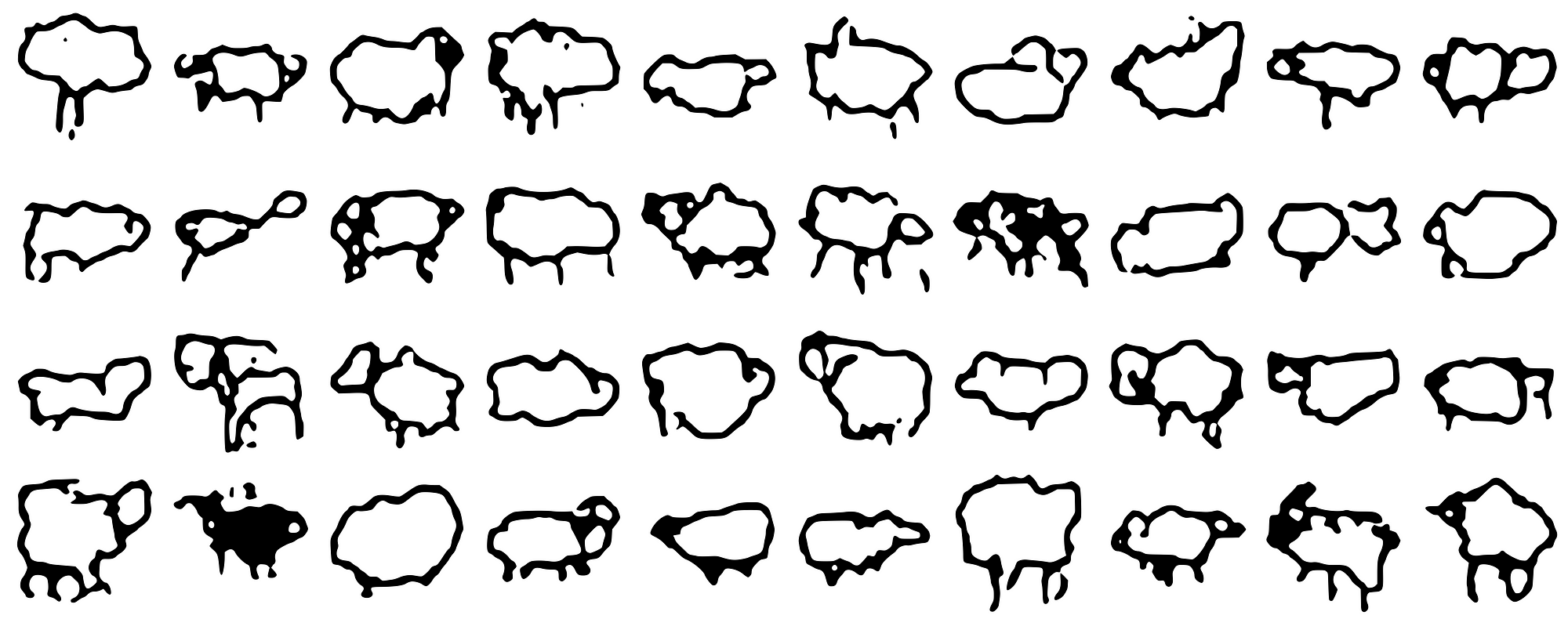

Figure 4 shows a sample of the sheep our AI/ML model generates — an android dream?

They do not look like the celebrities in Figure 2, but aren’t they cute ❤?

One of my favorites is in row 0, column 2 (zero-based indexing). Yes, that one facing right with the black face :) … What’s yours?

~ Fin ~

About the author

Dr. Ernesto Diaz-Aviles is the Co-Founder and CEO at Libre AI. Ernesto used some of the ideas in this post to teach his daughter about AI/ML and to draw her as many electric sheep as she demanded, without using the sheep-in-the-box trick. She likes some of them.

Notes

- In the paper A Neural Representation of Sketch Drawings by David Ha and Douglas Eck (2017), https://arxiv.org/abs/1704.03477, a recurrent neural network (RNN) is used to construct drawings based on path strokes and not pixels. We instead used a GAN, since we think it helps the explanation based on the apprentice-master analogy.

- Our model uses pixel-level information from the images both for training and for generating new instances. To improve the image quality, we convert the output bitmaps into vector graphics (SVG) using Potrace.
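The bitmap-to-SVG step could look roughly like the sketch below. It is an assumption-laden illustration, not our exact post-processing: it assumes bright pixels are pen strokes, writes the image in the plain PBM format that Potrace accepts, and calls the `potrace` command-line tool, which must be installed separately. The helper names `to_pbm` and `trace_to_svg` and the threshold of 128 are hypothetical.

```python
import subprocess

import numpy as np


def to_pbm(image, threshold=128):
    """Encode a 2-D grayscale array as plain PBM text (1 = black ink, 0 = white)."""
    # Assumption: bright pixels are strokes, so they become black ink.
    ink = (np.asarray(image) >= threshold).astype(int)
    height, width = ink.shape
    # For 28-pixel-wide drawings each row stays under plain PBM's 70-character line limit.
    rows = [" ".join(str(v) for v in row) for row in ink]
    return f"P1\n{width} {height}\n" + "\n".join(rows) + "\n"


def trace_to_svg(image, pbm_path, svg_path, threshold=128):
    """Write the bitmap to disk and let the potrace CLI vectorize it."""
    with open(pbm_path, "w") as f:
        f.write(to_pbm(image, threshold))
    # -s selects the SVG backend, -o names the output file.
    subprocess.run(["potrace", "-s", "-o", svg_path, pbm_path], check=True)


# Tiny 3x4 example: a bright diagonal stroke on a dark background.
demo = np.array([[255, 0, 0, 0],
                 [0, 255, 0, 0],
                 [0, 0, 255, 0]])
print(to_pbm(demo))
```

Calling `trace_to_svg(drawing, "sheep.pbm", "sheep.svg")` on a single 28x28 generated drawing would then produce a smooth vector outline instead of a blocky bitmap.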

∎